SPHP dataset. Our motion vector sensor (MVS) extracts edge images and two-directional motion vector images at each time frame. We also provide annotated body joints for human poses and corresponding grayscale images.

Abstract

We propose a sparse and privacy-enhanced representation for Human Pose Estimation (HPE). Given a perspective camera, we use a proprietary motion vector sensor (MVS) to extract an edge image and a two-directional motion vector image at each time frame. Both edge and motion vector images are sparse and contain much less information, which enhances human privacy. We advocate that edge information is essential for HPE, and that motion vectors complement edge information during fast movements.

We propose a fusion network that leverages recent advances in sparse convolution, typically used for 3D voxels, to efficiently process our proposed sparse representation, achieving about a 13x speed-up and a 96% reduction in FLOPs. We collect an in-house edge and motion vector dataset with 16 types of actions performed by 40 users using the proprietary MVS. Our method outperforms individual modalities that use only edge or only motion vector images. Finally, we validate the privacy-enhanced quality of our sparse representation through face recognition on CelebA (a large face dataset) and a user study on our in-house dataset.

SPHP Dataset

| ID# | Class# | Movement | ID# | Class# | Movement |
|---|---|---|---|---|---|
| 1 | 1 | Arm abduction | 9 | 3 | Walk in place |
| 2 | 1 | Arm bicep curl | 10 | 3 | Squat |
| 3 | 1 | Wave hello | 11 | 4 | Jump in place |
| 4 | 1 | Punch up forward | 12 | 4 | Jog in place |
| 5 | 2 | Leg knee lift | 13 | 4 | Jumping jack |
| 6 | 2 | Leg abduction | 14 | 3 | Standing side bend |
| 7 | 2 | Leg pulling | 15 | 4 | Hop on one foot |
| 8 | 4 | Elbow-to-knee | 16 | 3 | Roll wrists & ankles |

We introduce the Sparse and Privacy-enhanced Dataset for Human Pose Estimation (SPHP), which consists of synchronized, complementary edge and motion vector images along with ground truth labels for 13 joints. We collected data from 40 participants (20 male and 20 female). Participants performed 16 fitness-related actions, which are categorized into four classes based on the type of movement:

- Class 1: upper-body movements
- Class 2: lower-body movements
- Class 3: slow whole-body movements
- Class 4: fast whole-body movements
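The action-to-class assignment in the table above can be written down as a small lookup, which is handy when results need to be grouped per class. This is only a sketch: the names `ACTION_CLASS` and `actions_by_class` are hypothetical and do not come from the dataset's tooling; the ID and class numbers are copied from the table.

```python
from collections import defaultdict

# Action ID -> class number, transcribed from the SPHP action table.
ACTION_CLASS = {
    1: 1, 2: 1, 3: 1, 4: 1,      # upper-body movements
    5: 2, 6: 2, 7: 2,            # lower-body movements
    9: 3, 10: 3, 14: 3, 16: 3,   # slow whole-body movements
    8: 4, 11: 4, 12: 4, 13: 4, 15: 4,  # fast whole-body movements
}

def actions_by_class(mapping):
    """Group action IDs by their class number (hypothetical helper)."""
    groups = defaultdict(list)
    for action_id, cls in sorted(mapping.items()):
        groups[cls].append(action_id)
    return dict(groups)

groups = actions_by_class(ACTION_CLASS)
for cls in sorted(groups):
    print(f"Class {cls}: actions {groups[cls]}")
```

Note that the classes are not equally sized: Class 2 contains three actions and Class 4 contains five.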

Sparse Representation

- Edge Image: MVS uses an efficient hardware implementation of edge detection, similar to Canny edge detection, to generate edge images. Each pixel in the edge image has a value within the range $[0, 255]$. A higher value indicates a stronger edge.

- Motion Vector: Inspired by the motion detection of the Drosophila visual system and designed with patent-pending pixel-sensing technology, MVS detects horizontal and vertical motion vectors, denoted as $MV_X$ and $MV_Y$, by analyzing changes in illumination. Each value falls within the range $[-128, 128]$. The magnitude and sign of a value represent the strength and direction of motion.
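As a minimal sketch, assuming the edge and motion vector images are available as NumPy arrays with the value ranges stated above, the following shows one way to scale them for a network and to measure how sparse a frame is. The function names `normalize_mvs_frame` and `sparsity`, the array dtypes, and the choice of scaling to $[0, 1]$ and $[-1, 1]$ are illustrative assumptions, not part of the MVS or the dataset tooling.

```python
import numpy as np

def normalize_mvs_frame(edge, mv_x, mv_y):
    """Scale raw values to float32 (assumed preprocessing, not from the source)."""
    edge_n = edge.astype(np.float32) / 255.0  # [0, 255]    -> [0, 1]
    mv_x_n = mv_x.astype(np.float32) / 128.0  # [-128, 128] -> [-1, 1]
    mv_y_n = mv_y.astype(np.float32) / 128.0
    return edge_n, mv_x_n, mv_y_n

def sparsity(img):
    """Fraction of pixels that are exactly zero."""
    return float(np.mean(img == 0))

# Tiny synthetic 4x4 frame (made-up values, for illustration only).
edge = np.zeros((4, 4), dtype=np.uint8)
edge[1, 1:3] = 255                      # two strong edge pixels
mv_x = np.zeros((4, 4), dtype=np.int16)
mv_x[1, 1] = -64                        # motion in the negative x direction
mv_y = np.zeros((4, 4), dtype=np.int16)
mv_y[1, 2] = 128                        # strongest positive y motion

edge_n, mv_x_n, mv_y_n = normalize_mvs_frame(edge, mv_x, mv_y)
print("edge sparsity:", sparsity(edge))
print("normalized MV_X at (1, 1):", mv_x_n[1, 1])
```

Dividing by a fixed constant keeps zero-valued pixels at zero, so the sparsity of each image is unchanged by the scaling.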

Fusion Model

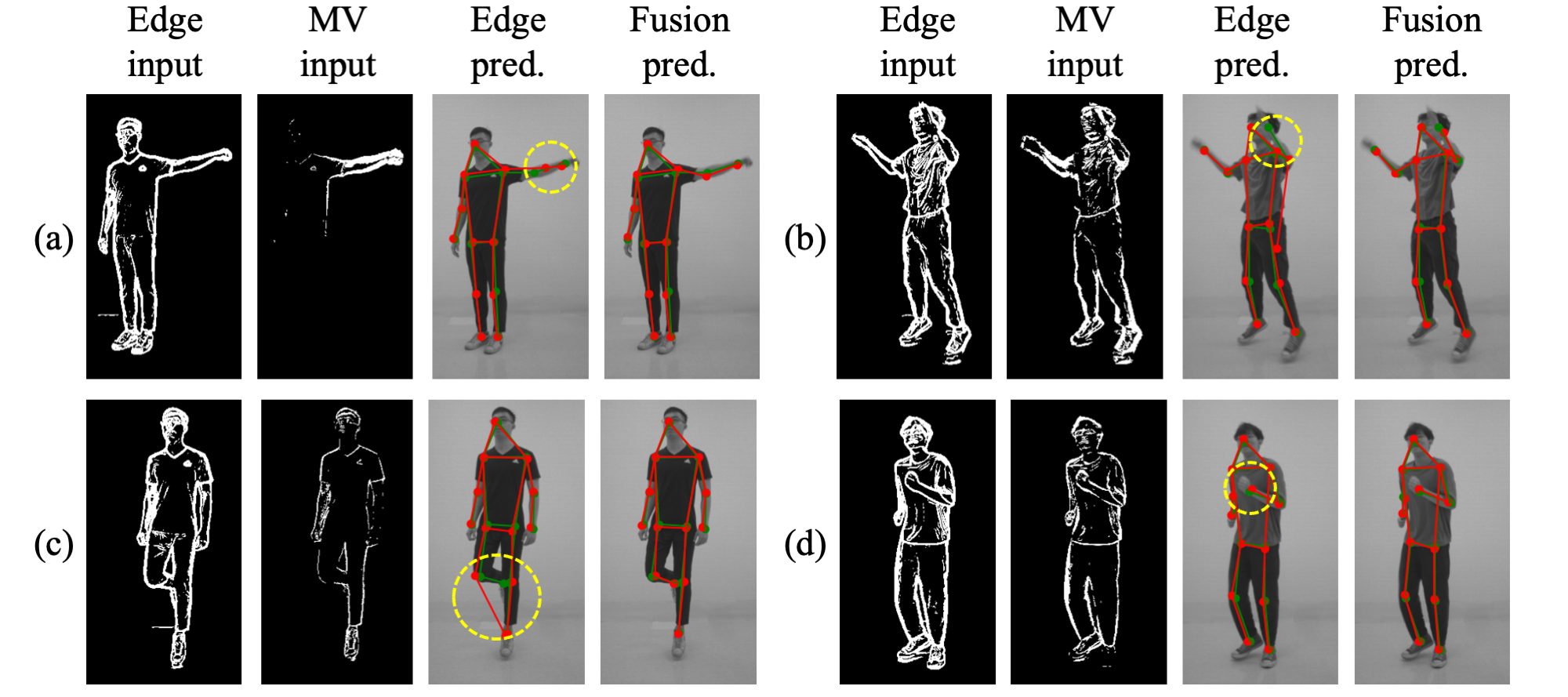

Edge and motion vector information complement each other. While edge information is sufficient for detecting clear, non-blurred body joints, incorporating motion vectors into our model can effectively address the challenges posed by fast movements and overlapping boundaries, which may confuse edge-based HPE models.

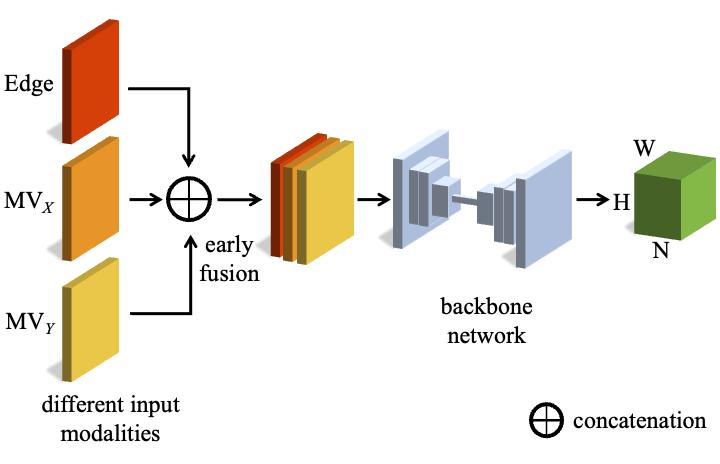

Hence, we aim to combine the complementary information of edge and motion vectors while keeping our model compact and efficient. We directly concatenate an edge image and a two-directional motion vector image to form the early fusion model (referred to as FS), as illustrated in the accompanying figure. Our FS model can leverage various single-branch network architectures designed for compactness and efficiency.
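The early fusion step described above amounts to stacking the three images along a channel axis. The sketch below illustrates this with NumPy and also gathers the non-zero pixel locations, which is the kind of coordinate and feature pair that sparse convolution libraries generally consume. The names `early_fusion` and `active_sites`, the channel order, and the rule that a pixel is active when any channel is non-zero are assumptions made for illustration, not details confirmed by the source.

```python
import numpy as np

def early_fusion(edge, mv_x, mv_y):
    """Concatenate edge, MV_X and MV_Y into one (3, H, W) input array."""
    return np.stack([edge, mv_x, mv_y], axis=0).astype(np.float32)

def active_sites(fused):
    """Return coordinates and features of pixels with any non-zero channel."""
    mask = np.any(fused != 0, axis=0)   # (H, W) boolean mask
    coords = np.argwhere(mask)          # (K, 2) rows of (row, col)
    feats = fused[:, mask].T            # (K, 3) per-pixel channel values
    return coords, feats

# Synthetic, already-normalized 4x4 inputs (made-up values).
edge = np.zeros((4, 4), dtype=np.float32)
edge[1, 1], edge[1, 2] = 0.5, 1.0
mv_x = np.zeros((4, 4), dtype=np.float32)
mv_x[1, 1] = -0.5
mv_y = np.zeros((4, 4), dtype=np.float32)
mv_y[2, 3] = 1.0

fused = early_fusion(edge, mv_x, mv_y)
coords, feats = active_sites(fused)
print("fused shape:", fused.shape)
print("active pixels:", len(coords), "of", fused.shape[1] * fused.shape[2])
```

Because the fused array is a single multi-channel image, it can be fed to any single-branch backbone whose first layer accepts three input channels.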

Evaluation Metric

Mean Per Joint Position Error (MPJPE) is chosen for evaluation. It is the mean Euclidean distance between the predicted position $\hat{y}_{i}$ and the ground truth position $y_{i}$ of each joint:

$$\mathrm{MPJPE} = \frac{1}{N} \sum_{i=1}^{N} \lVert \hat{y}_{i} - y_{i} \rVert_{2}$$

where $N$ is the number of joints.
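The metric can be sketched in a few lines of NumPy. The function name `mpjpe` and the array layout (joints along the second-to-last axis, coordinates along the last axis) are assumptions for illustration; the 13 joints match the number of annotated joints in SPHP, while the coordinate values are made up.

```python
import numpy as np

def mpjpe(pred, gt):
    """Mean Euclidean distance between predicted and ground truth joints.

    pred, gt: arrays of shape (..., N, D), with N joints and D coordinates.
    Any leading axes (for example, frames) are averaged as well.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    per_joint = np.linalg.norm(pred - gt, axis=-1)  # distance per joint
    return float(per_joint.mean())

# 13 joints in 2D; every prediction is off by (3, 4), i.e. a distance of 5.
gt = np.zeros((13, 2))
pred = gt + np.array([3.0, 4.0])
print("MPJPE:", mpjpe(pred, gt))
```

The result is expressed in the same unit as the joint coordinates passed in.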

Quantitative Results

| Backbone | Params# | Input | Traditional C1 | Traditional C2 | Traditional C3 | Traditional C4 | Traditional Mean | Sparse C1 | Sparse C2 | Sparse C3 | Sparse C4 | Sparse Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DHP19 | 218K | GR | 2.62 | 3.08 | 3.33 | 3.62 | 3.20 | - | - | - | - | - |
| DHP19 | 218K | MV | 16.96 | 6.50 | 6.43 | 5.11 | 8.66 | 56.96 | 27.12 | 25.86 | 14.12 | 30.20 |
| DHP19 | 218K | ED | 3.14 | 3.64 | 3.71 | 4.03 | 3.65 | 5.18 | 6.47 | 5.94 | 6.76 | 6.10 |
| DHP19 | 218K | FS | 3.36 | 3.32 | 3.56 | 3.88 | 3.56 | 5.00 | 6.52 | 6.10 | 6.45 | 6.01 |
| U-Net-Small | 1.9M | GR | 1.82 | 2.08 | 2.13 | 2.48 | 2.15 | - | - | - | - | - |
| U-Net-Small | 1.9M | MV | 19.56 | 5.41 | 5.69 | 3.82 | 8.52 | 54.29 | 20.93 | 19.25 | 8.11 | 24.85 |
| U-Net-Small | 1.9M | ED | 3.20 | 3.49 | 3.19 | 3.49 | 3.35 | 3.35 | 3.78 | 3.48 | 3.95 | 3.65 |
| U-Net-Small | 1.9M | FS | 3.32 | 2.91 | 2.79 | 3.18 | 3.07 | 3.42 | 3.61 | 3.41 | 3.69 | 3.54 |
| U-Net-Large | 7.7M | GR | 1.73 | 2.14 | 2.00 | 2.33 | 2.06 | - | - | - | - | - |
| U-Net-Large | 7.7M | MV | 18.76 | 5.27 | 5.54 | 3.79 | 8.25 | 52.08 | 20.00 | 18.65 | 7.77 | 23.86 |
| U-Net-Large | 7.7M | ED | 2.95 | 3.08 | 2.86 | 3.27 | 3.05 | 3.32 | 3.70 | 3.47 | 3.86 | 3.60 |
| U-Net-Large | 7.7M | FS | 2.68 | 2.94 | 2.79 | 3.14 | 2.90 | 3.32 | 3.64 | 3.41 | 3.64 | 3.50 |

Qualitative Results